12 May 2021

A great thing about GraphQL is that we don’t need to rewrite our existing APIs to get started.

We can build a GraphQL layer whose resolvers fetch data from our existing REST APIs. In Apollo Server, Apollo REST Data Source is probably the cleanest way to build this layer, and it adds useful features such as caching, request deduplication, and error handling on top.

To get started, we need to install the apollo-datasource-rest npm package.

In this post, I will show how to build a GraphQL server on top of the free APIs provided by https://jsonplaceholder.typicode.com/

I will be using two resources: posts and users.

Inspecting the APIs, we can see the structure of the response data:

GET https://jsonplaceholder.typicode.com/posts

[
  {
    "userId": 1,
    "id": 1,
    "title": "sunt aut facere repellat provident occaecati excepturi optio reprehenderit",
    "body": "quia et suscipit...ostrum rerum est autem sunt rem eveniet architecto"
  },
  {
    "userId": 1,
    "id": 2,
    "title": "qui est esse",
    "body": "est rerum tempore vita..qui aperiam non debitis possimus qui neque nisi nulla"
  },
  ...
]

GET https://jsonplaceholder.typicode.com/posts/1

{
  "userId": 1,
  "id": 1,
  "title": "sunt aut facere repellat provident occaecati excepturi optio reprehenderit",
  "body": "quia et suscipi tno .. ostrum rerum est autem sunt rem eveniet architecto"
}

GET https://jsonplaceholder.typicode.com/users/1

{
  "id": 1,
  "name": "Leanne Graham",
  "username": "Bret",
  "email": "Sincere@april.biz",
  "phone": "1-770-736-8031 x56442",
  "website": "hildegard.org"
}

First, we’ll create our GraphQL schema:

type Post {
  id: Int!
  userId: Int!
  title: String!
  body: String!
  user: User
}

type User {
  id: Int!
  name: String!
  username: String!
  email: String!
}

Then we define our Query type:

type Query {
  post(id: Int!): Post
  posts: [Post]
}

Next, we create the REST data source classes:

import { RESTDataSource } from 'apollo-datasource-rest';

export class PostsAPI extends RESTDataSource {
  constructor() {
    super();
    this.baseURL = 'https://jsonplaceholder.typicode.com/';
  }

  async find(id: number) {
    return this.get(`posts/${id}`);
  }

  async list() {
    return this.get('posts');
  }
}

export class UsersAPI extends RESTDataSource {
  constructor() {
    super();
    this.baseURL = 'https://jsonplaceholder.typicode.com/';
  }

  async find(id: number) {
    return this.get(`users/${id}`);
  }
}
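Request deduplication matters for this schema: if Post.user is resolved by calling UsersAPI.find(post.userId), a list of posts written by the same author would otherwise request users/1 once per post. RESTDataSource handles this for us (roughly, by memoizing GET requests by URL). The Go sketch below only illustrates the general idea and is not Apollo's implementation; memoGetter and the fake fetch function are hypothetical stand-ins for an HTTP client.

```go
package main

import (
	"fmt"
	"sync"
)

// call holds the single in-flight or completed result for one URL.
type call struct {
	once sync.Once
	val  string
	err  error
}

// memoGetter (hypothetical) wraps a fetch function and memoizes results by URL,
// so repeated or concurrent requests for the same URL trigger one underlying fetch.
type memoGetter struct {
	fetch func(url string) (string, error)
	mu    sync.Mutex
	calls map[string]*call
}

func newMemoGetter(fetch func(string) (string, error)) *memoGetter {
	return &memoGetter{fetch: fetch, calls: make(map[string]*call)}
}

// Get returns the memoized result for url, fetching it at most once.
func (m *memoGetter) Get(url string) (string, error) {
	m.mu.Lock()
	c, ok := m.calls[url]
	if !ok {
		c = &call{}
		m.calls[url] = c
	}
	m.mu.Unlock()
	// sync.Once makes concurrent callers for the same URL wait for one fetch
	c.once.Do(func() { c.val, c.err = m.fetch(url) })
	return c.val, c.err
}

func main() {
	hits := 0
	// fake fetcher standing in for an HTTP GET against the users endpoint
	g := newMemoGetter(func(url string) (string, error) {
		hits++
		return "user from " + url, nil
	})
	// three posts by the same author resolve their user through a single fetch
	for _, userID := range []int{1, 1, 1} {
		g.Get(fmt.Sprintf("users/%d", userID))
	}
	fmt.Println(hits) // 1
}
```

Keying by URL is what makes deduplication work: the second and third lookups for users/1 find the existing entry and reuse its result.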

08 May 2021

A very basic introduction to gRPC: it is a modern RPC framework built on HTTP/2 that uses Protobuf serialization instead of commonly used text-based formats such as JSON or XML.

RPC stands for “Remote Procedure Call”, which basically means “invoking a function on another system”. SOAP (Simple Object Access Protocol) is a well-known example of RPC and was very popular from the late 90s onward. Remote Procedure Call techniques are thus not new; the concept has been around for decades.

SOAP and traditional RPC frameworks have their issues: heavy XML formats and payloads, tight coupling, schema maintenance problems, poor performance, and a steep learning curve. The REST architectural style emerged as a simpler alternative that solved many of SOAP's shortcomings, and REST has since become the default and most widely used style for designing and developing APIs.

So the question arises: why go back to RPC instead of REST? There are already plenty of articles on gRPC vs REST, so instead of debating the subject, I’ll focus on the salient features of gRPC and on developing gRPC services.

gRPC is a modern RPC framework built at Google (thus the ‘g’ in gRPC), and the protocol itself is built on HTTP/2. Using HTTP/2 under the hood lets gRPC optimize the network layer, and unlike REST, SOAP, or GraphQL, which typically use text-based data formats, gRPC uses the binary Protocol Buffers (Protobuf) format. This gives us several advantages:

- Protobuf is compact on the wire, giving high-performance, low-overhead messaging.
- HTTP/2 multiplexes many concurrent requests and responses over a single connection, and streams can be bi-directional.
- Streaming requests and responses are first-class features.

In addition, gRPC comes with modern tooling and an ecosystem that supports code generation, load balancing, tracing, health checking, and authentication, along with seamless interoperability between clients and services written in different languages.

Full gRPC Introduction & History: https://grpc.io/about/

gRPC can be a great high-performance option for communication between microservices written in multiple languages.

Getting started with gRPC in Go

Before we do anything, we need to install the dependencies:

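# Instructions for macOS
# You can find similar packages for your OS
$ brew install protobuf # protocol buffer compiler; protoc
$ brew install protoc-gen-grpc-web # protoc plugin that generates code for gRPC-Web clients
$ brew install grpcurl # curl for gRPC servers
# protoc plugins used by --go_out and --go-grpc_out to generate Go code
$ go install google.golang.org/protobuf/cmd/protoc-gen-go@latest
$ go install google.golang.org/grpc/cmd/protoc-gen-go-grpc@latest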

Here is an example definition of a sample Echo service with a method called Hello that echoes back the text passed to it. The code is heavily commented, so it explains itself.

// use proto3 version of the protocol buffers language
syntax = "proto3";

// go package for generated go files
option go_package = "protos/";

// define a message type named Msg with a field named Text of type string
// each field/attribute must be assigned a unique number
// these field numbers identify fields in the message binary format and should not be changed once the message type is in use
message Msg {
  string Text = 1;
}

// define an RPC service named Echo
service Echo {
  // define a method named Hello that takes a Msg and returns a Msg
  rpc Hello(Msg) returns (Msg);
}

Full Proto Language Guide: https://developers.google.com/protocol-buffers/docs/proto3
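To see why field numbers matter on the wire, here is a minimal sketch that hand-encodes a Msg with Text set, following the documented Protobuf wire format: a varint key ((field_number << 3) | wire_type), where strings use wire type 2 (length-delimited), followed by a varint length and the raw bytes. encodeStringField is a hypothetical helper for illustration; real code should use the generated types and the protobuf runtime. It assumes Go 1.19+ for binary.AppendUvarint.

```go
package main

import (
	"encoding/binary"
	"encoding/json"
	"fmt"
)

// encodeStringField hand-encodes one protobuf string field:
// varint key ((fieldNumber << 3) | wireType), varint length, then the raw bytes.
func encodeStringField(fieldNumber uint64, s string) []byte {
	const wireTypeLen = 2 // length-delimited: strings, bytes, nested messages
	buf := binary.AppendUvarint(nil, fieldNumber<<3|wireTypeLen)
	buf = binary.AppendUvarint(buf, uint64(len(s)))
	return append(buf, s...)
}

func main() {
	// Msg{Text: "hello"}: Text is field number 1 in the proto definition
	pb := encodeStringField(1, "hello")
	js, _ := json.Marshal(map[string]string{"Text": "hello"})

	fmt.Printf("protobuf: % x (%d bytes)\n", pb, len(pb))
	fmt.Printf("json:     %s (%d bytes)\n", js, len(js))
}
```

For this five-character string the Protobuf field takes 7 bytes (0a 05 followed by "hello"), versus 16 bytes for the equivalent JSON object. Only the field number, not the name Text, goes on the wire, which is why field numbers must stay stable once deployed.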

We can run protoc -I ./ *.proto --go_out=. --go-grpc_out=. to compile this proto file, which outputs two files, echo.pb.go and echo_grpc.pb.go, in the protos folder. I am using Go as my primary language, but the same proto file can be used to generate code for over 12 programming languages.

Those files magically incorporate all the protobuf and gRPC plumbing. They can be intimidating to look at, but we don’t need to understand all of it at this moment.

Based on our service definition, there are three things of interest: the definition of Msg, the server interface EchoServer, and the client interface EchoClient:

package protos

// ...

type Msg struct {
	state         protoimpl.MessageState
	sizeCache     protoimpl.SizeCache
	unknownFields protoimpl.UnknownFields

	Text string `protobuf:"bytes,1,opt,name=Text,proto3" json:"Text,omitempty"`
}

package protos

// ...

type EchoClient interface {
	Hello(ctx context.Context, in *Msg, opts ...grpc.CallOption) (*Msg, error)
}

// ...

type EchoServer interface {
	Hello(context.Context, *Msg) (*Msg, error)
}

It might seem unbelievable, but all we need to do to create our gRPC service is implement these interfaces, which means we can focus solely on our business logic.


19 Apr 2021

The complete source code for the snippets in this post is available at https://github.com/RohitRox/go-test-supporting-project

Golang Testing Level I: Unit Tests

// sample code for Post struct
package models

import (
	"errors"
	"regexp"
)

type Post struct {
	Id    int    `json:"id"`
	Title string `json:"title"`
	Body  string `json:"body"`
}

var validPostTitlePatt = regexp.MustCompile(`^\w+[\w\s]+$`)

func NewPostWithTitle(title string) (post Post, err error) {
	if !validPostTitlePatt.MatchString(title) {
		err = errors.New("title is required and only alphanumeric characters, underscores, and spaces are permitted in title")
	}
	post = Post{Title: title}
	return
}

Simple unit testing with the testing package:

// use a separate _test package: it forces us to use the package
// the same way its consumers will
package models_test

import (
	"testing"

	m "go-test-supporting-project/models"
)

func TestPost(t *testing.T) {
	// tabular structure for test data, pretty common in the Go world
	testData := []struct {
		title string
		error bool
	}{
		{"Hello World", false},
		{"Hello Testing 124", false},
		{"Hello_World", false},
		{"Hello World!", true},
		{"Hello World - 124", true},
		{"Hello@World", true},
	}

	for _, dat := range testData {
		// t.Run for each test data entry
		// func (t *T) Run(name string, f func(t *T)) bool
		// t.Run runs f as a subtest of t called name.
		t.Run(dat.title, func(t *testing.T) {
			post, err := m.NewPostWithTitle(dat.title)
			if dat.error {
				if err == nil {
					// use t.Errorf/t.Error to log and mark the test as failed
					// use t.Fatalf/t.Fatal to log and fail fast
					// use t.Logf/t.Log to log only
					// use t.Skip to skip
					// Full docs: https://golang.org/pkg/testing/#pkg-index
					t.Errorf("Expected error, got nil for post: %s", post.Title)
				}
			} else {
				if err != nil {
					t.Errorf("Unexpected error: %s for post: %s", err, post.Title)
				}
			}
		})
	}
}

Testable Examples:

package models_test

import (
	"fmt"

	m "go-test-supporting-project/models"
)

// go test runs Example functions and verifies their output:
// a concluding line comment that begins with "Output:" is compared
// with the standard output of the function when the tests are run.
// Examples are also displayed as package documentation.
// More info: https://blog.golang.org/examples
func ExamplePost() {
	title := "Hello Testing 124"
	post, err := m.NewPostWithTitle(title)
	if err != nil {
		fmt.Println("Invalid title")
		fmt.Println(err)
	}
	fmt.Printf("Post initialized with title: %s", post.Title)
	// Output:
	// Post initialized with title: Hello Testing 124
}

Golang Testing Level I: Handler/Controller Tests

A sample handler func:

import (
	"fmt"
	"net/http"
)

type Handler struct{}

func (h Handler) Status(w http.ResponseWriter, r *http.Request) {
	w.WriteHeader(http.StatusOK)
	fmt.Fprintf(w, "Status OK")
}

The httptest package provides ResponseRecorder, an implementation of http.ResponseWriter that records the response. We can pass it to the handler and inspect what it recorded after the handler runs:

import (
	"net/http"
	"net/http/httptest"
	"testing"
)

func TestStatusHandler(t *testing.T) {
	t.Run("status check", func(t *testing.T) {
		// initialize a new request
		req, err := http.NewRequest("GET", "/anyroute", nil)
		if err != nil {
			t.Fatal(err)
		}

		h := Handler{}
		// create a ResponseRecorder (which satisfies http.ResponseWriter) to record the response
		rec := httptest.NewRecorder()
		// call the handler
		h.Status(rec, req)

		// check the status code
		// rec.Body can also be checked for the response body
		if status := rec.Code; status != http.StatusOK {
			t.Errorf("expected status code: %v got: %v",
				http.StatusOK, status)
		}
	})
}
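The test above notes that rec.Body can also be checked. A minimal sketch that asserts on both the status code and the body, assuming the Handler shown earlier (copied here so the block compiles on its own). It uses httptest.NewRequest, which returns a ready-made request without an error value; checkStatus is a hypothetical helper name.

```go
package main

import (
	"fmt"
	"net/http"
	"net/http/httptest"
)

type Handler struct{}

func (h Handler) Status(w http.ResponseWriter, r *http.Request) {
	w.WriteHeader(http.StatusOK)
	fmt.Fprint(w, "Status OK")
}

// checkStatus runs the handler against a ResponseRecorder and returns
// the recorded status code and response body.
func checkStatus() (int, string) {
	req := httptest.NewRequest(http.MethodGet, "/anyroute", nil)
	rec := httptest.NewRecorder()
	Handler{}.Status(rec, req)
	return rec.Code, rec.Body.String()
}

func main() {
	code, body := checkStatus()
	fmt.Println(code, body) // 200 Status OK
}
```

In a real _test.go file the same two values would be compared inside TestStatusHandler with t.Errorf.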

10 Apr 2021

Nil Slice Vs Empty Slice

// create a nil slice of integers
var nilArr []int

// create an empty (non-nil) slice of integers
emptyArr := make([]int, 0)
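A short sketch of where the difference between the two actually shows up: both have length 0 and behave the same for len, range, and append, but only the nil slice equals nil, and encoding/json encodes them differently. encode is a hypothetical helper.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// encode marshals a slice to JSON; a nil slice becomes null, an empty one [].
func encode(s []int) string {
	b, err := json.Marshal(s)
	if err != nil {
		return err.Error()
	}
	return string(b)
}

func main() {
	var nilSlice []int
	emptySlice := make([]int, 0)

	// both report length 0 and can be ranged over or appended to
	fmt.Println(len(nilSlice), len(emptySlice)) // 0 0

	// only the nil slice compares equal to nil
	fmt.Println(nilSlice == nil, emptySlice == nil) // true false

	// encoding/json tells them apart
	fmt.Println(encode(nilSlice), encode(emptySlice)) // null []
}
```

This matters for APIs: returning a nil slice from a handler produces null in the response, which some clients handle differently from an empty array.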

Unnecessary blank identifier

// no need for "for _ = range something"; this works just fine
for range something {
	run()
}
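A runnable sketch of the same idea, ranging over a channel when only the number of received values matters. countTicks is a hypothetical helper; note the opening brace must stay on the same line as the for statement.

```go
package main

import "fmt"

// countTicks drains ch without naming the received values;
// "for range ch" replaces the older "for _ = range ch" form.
func countTicks(ch <-chan struct{}) int {
	n := 0
	for range ch {
		n++
	}
	return n
}

func main() {
	ch := make(chan struct{}, 3)
	for i := 0; i < 3; i++ {
		ch <- struct{}{}
	}
	close(ch) // lets the range loop terminate once the buffer is drained
	fmt.Println(countTicks(ch)) // 3
}
```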

Checking interfaces and types at runtime

type BroadcastIface interface {
	Broadcast()
}

var temp interface{} = somevar
_, ok := temp.(BroadcastIface)
if !ok {
	fmt.Println("somevar does not implement BroadcastIface")
}
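Two related tools, sketched below under the assumption of a hypothetical radio type: a type switch branches on the dynamic type when there are several cases to handle, and a blank-identifier assignment checks interface satisfaction at compile time instead of at runtime.

```go
package main

import "fmt"

type BroadcastIface interface {
	Broadcast()
}

// radio is a hypothetical type used only for illustration.
type radio struct{}

func (radio) Broadcast() {}

// compile-time check: the build fails if radio stops implementing BroadcastIface
var _ BroadcastIface = radio{}

// describe uses a type switch to branch on the dynamic type at runtime.
func describe(v interface{}) string {
	switch x := v.(type) {
	case BroadcastIface:
		x.Broadcast()
		return "broadcaster"
	case int:
		return fmt.Sprintf("int %d", x)
	default:
		return "unknown"
	}
}

func main() {
	fmt.Println(describe(radio{})) // broadcaster
	fmt.Println(describe(42))      // int 42
	fmt.Println(describe("text"))  // unknown
}
```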

JSON Marshal type conversion

type person struct {
	Name    string  `json:"name"`
	Balance float64 `json:"balance,string"`
}

p := person{Name: "Steve", Balance: 200}
jsonData, _ := json.Marshal(p)
fmt.Println(string(jsonData))
// {"name":"Steve","balance":"200"}
// Note: the ",string" tag option encodes the float64 balance as a JSON string
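The ,string option works in the other direction too: when decoding, encoding/json expects the number inside a JSON string and parses it into the float64 field. A minimal sketch; decodePerson is a hypothetical helper and the input document is made up for illustration.

```go
package main

import (
	"encoding/json"
	"fmt"
)

type person struct {
	Name    string  `json:"name"`
	Balance float64 `json:"balance,string"`
}

// decodePerson parses JSON in which balance arrives as a quoted number.
func decodePerson(data []byte) (person, error) {
	var p person
	err := json.Unmarshal(data, &p)
	return p, err
}

func main() {
	p, err := decodePerson([]byte(`{"name":"Steve","balance":"200.5"}`))
	fmt.Println(p.Name, p.Balance, err) // Steve 200.5 <nil>
}
```

This is handy for APIs that send numeric values as strings to avoid precision loss in JavaScript clients.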

A case for the empty struct

// an empty structure that occupies zero bytes of storage
// struct{}{}
// can be used instead of bool or int in a done channel
done := make(chan struct{})
// ...
done <- struct{}{}

type CalcIface interface {
	Add(a, b int) int
	Sub(a, b int) int
}

// the methods don't use any receiver state, so an empty struct just groups them together
type calc struct{}

func (calc) Add(a, b int) int { return a + b }
func (calc) Sub(a, b int) int { return a - b }

// only expose Calc and CalcIface
var Calc CalcIface = calc{}

05 Jan 2020

There has been a lot of talk and buzz around microfrontends.

According to https://micro-frontends.org/, microfrontends are:

Techniques, strategies and recipes for building a modern web app with multiple teams that can ship features independently.

The basic idea is to extend the concept of microservices to frontend development, so that a system can be divided among teams that each own an end-to-end slice and independently deliver frontend applications that compose into a greater whole.

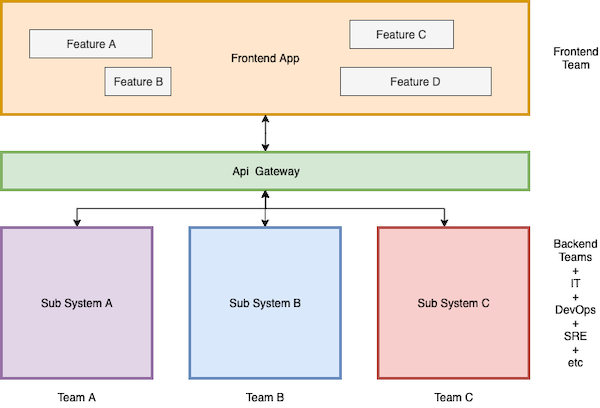

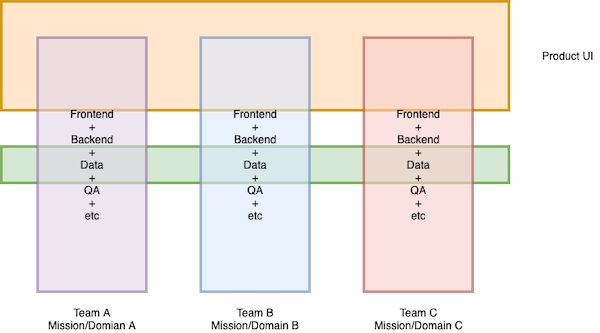

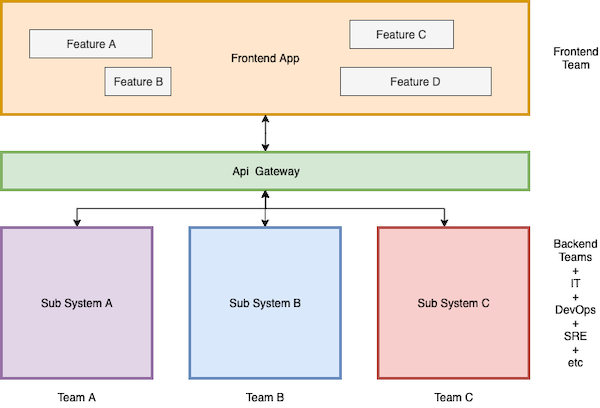

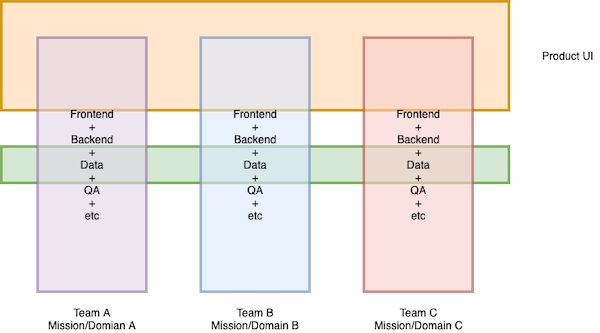

A simple illustration of the idea:

Microservices with Monolith Frontend

Microservices with Micro Frontend

Microfrontends bring to frontend engineering the same benefits that microservices bring to backend services: performance, incremental upgrades, decoupled codebases, independent deployments, and autonomous teams.

Now the teams and the UI can be broken down into smaller groups, as shown in the pictures. The challenge lies in integrating them and serving a unified experience to users, and there will always be cases where interfaces or interface components collide, since UI components can easily span multiple pages and domains.

A few architectures are already being used and proposed to implement microfrontends. Cam Jackson’s post on Martin Fowler’s website covers some nice approaches.
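One of the integration approaches commonly discussed is server-side composition: a server fetches HTML fragments owned by different teams and stitches them into a single page. The Go sketch below is a hypothetical illustration of that idea only, not necessarily the approach taken at CloudFactory; fragmentServer uses httptest servers as stand-ins for each team's independently deployed frontend service.

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
	"strings"
)

// fetchFragment GETs an HTML fragment owned by one team.
func fetchFragment(url string) (string, error) {
	resp, err := http.Get(url)
	if err != nil {
		return "", err
	}
	defer resp.Body.Close()
	b, err := io.ReadAll(resp.Body)
	return string(b), err
}

// composePage stitches the fragments into one page, in the given order.
func composePage(urls ...string) (string, error) {
	var sb strings.Builder
	sb.WriteString("<html><body>")
	for _, u := range urls {
		frag, err := fetchFragment(u)
		if err != nil {
			return "", err
		}
		sb.WriteString(frag)
	}
	sb.WriteString("</body></html>")
	return sb.String(), nil
}

// fragmentServer starts a local stand-in for one team's frontend service.
func fragmentServer(html string) *httptest.Server {
	return httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		fmt.Fprint(w, html)
	}))
}

func main() {
	header := fragmentServer("<header>Team A</header>")
	defer header.Close()
	products := fragmentServer("<main>Team B</main>")
	defer products.Close()

	page, err := composePage(header.URL, products.URL)
	if err != nil {
		panic(err)
	}
	fmt.Println(page)
}
```

Each team can redeploy its fragment service independently, but this is also where the collision problem above appears: the composer has to agree with every team on where fragments go and how shared styles and scripts are loaded.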

Let’s see how our take on microfrontends at Cloudfactory affects page organization.
